
This is going to be a multipart series on how to set up the ULTIMATE WSL2 and Docker environment, so you can get the most out of it in your everyday developer’s life.

Now, let’s first tackle the meaning of ULTIMATE, since everyone has their own interpretation of what it means. Here is mine:

- Reliable – The setup has to be bulletproof against system failures and data loss, and it has to be organized, so that everything is in its proper place.

- Fast – Working on projects, especially web projects where you may have to reload a site many times to see changes, has to perform like a bare-metal setup.

- Convenient – There’s nothing more frustrating than having to turn knobs in multiple places just to get the most basic things to work.

Now that we’re on the same page about the meaning of ULTIMATE, let’s get started!

The problem with the initial WSL2 setup

Let’s start this series off with the proper setup of WSL2.

I assume that you already have a successfully running WSL2 distro on your Windows machine. If you installed the distro from the Microsoft Store, it’s probably located somewhere in your AppData folder, God knows where.

Also, you may be working on your fancy NodeJS server, which is probably located under /mnt/c/Users/<username>/Projects/NodeJS/my-most-paying-client, or even under /mnt/d if you’re on the safe side and do NOT store your sensitive data on C: .

So, there are two problems here.

First, remember when I said it has to be reliable? Well, that’s absolutely not the case here. Since Windows can crash, and your AppData folder cannot be moved outside of C:\Users\<username> , you’re likely to lose all the hard work you put into setting up your distro.

The second issue is speed. As you may or may not know: to get the best performance out of WSL2 (which is near bare-metal) you MUST store your project files inside the WSL2 filesystem, for example under /home/<username> .

The ULTIMATE solution

Fortunately we can tackle both of these problems with some setup.

Recently, WSL has introduced a nice feature, wsl --mount, which allows you to attach a disk and mount its ext4 partitions into WSL, then access them natively like you would any other ext4 partition. This also comes with a huge performance benefit, because it avoids the slow 9P file sharing that is used to reach Windows drives under /mnt/c. The plan looks like this:

- Create an empty vhd-file and format it as ext4

- Mount the vhd-file into WSL as a partition

- Move all projects into that partition

- Symlink the partition as our /home/<username> folder

1. Creating the virtual hard disk (VHD)

First you need to create a virtual disk. You can easily do that with Windows Disk Management. Make sure to create a dynamically expanding disk, which will slowly grow in size as you copy files to it.

If you’re a power user, you probably have a separate partition for your projects, images, etc. Make sure to save the newly created virtual disk somewhere safe, in case of a system crash. Also make sure to include it in your backup routine.

2. Formatting VHD to ext4

There are many ways to format a disk as ext4 under Windows. The easiest I’ve found so far is AOMEI Partition Assistant, which comes with a free trial that is enough for this purpose (not sponsored).

Simply open up AOMEI Partition Assistant and create an ext4 partition on your newly created and mounted disk.

3. Mounting VHD into WSL2

Now you should be able to access the disk under /mnt/wsl/dataDisk and see a lost+found folder in there.
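One way to get there, as a hedged sketch: recent WSL builds accept `wsl --mount --vhd <path>` together with `--partition`, `--type` and `--name`, and a disk mounted with `--name dataDisk` appears under /mnt/wsl/dataDisk. The helper below only assembles and prints that command, so you can inspect it before running it from an elevated prompt. The function name, the example path and the default partition index of 1 are assumptions made for illustration.

```shell
# Print the wsl command that attaches a VHD and mounts one of its partitions.
# Usage: wsl_mount_cmd <windows-path-to-vhd> <mount-name> [partition-index]
wsl_mount_cmd() {
    vhd_path=$1
    mount_name=$2
    partition=${3:-1}   # assumption: the ext4 partition is the first one on the disk
    # %s keeps the backslashes of the Windows path untouched
    printf 'wsl --mount --vhd "%s" --partition %s --type ext4 --name %s\n' \
        "$vhd_path" "$partition" "$mount_name"
}
```

For example, `wsl_mount_cmd 'D:\path_to\data.vhdx' dataDisk` prints the command for the first partition of that file. The printed line can be pasted into PowerShell unchanged.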

You may also want to save this script somewhere on your computer and run it with Task Scheduler every time you log in to your PC.

Simply create a Start a program action that runs powershell, and add this line as its arguments:

-ExecutionPolicy Bypass -File D:\path_to\vhd.ps1

4. Migrating user data to VHD

Let’s create our user folder there, move all our /home/<username>/* files into the new folder and symlink it.
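A minimal sketch of that migration, assuming the disk is mounted at /mnt/wsl/dataDisk. Instead of moving the files in one go, it copies them and keeps the old home directory as a `.bak` backup, which is a slightly more cautious variant of the move described here. The function name is hypothetical. Run it as root, from a shell whose working directory is outside the home folder.

```shell
# Copy a home directory to a new location and replace it with a symlink.
# Usage: migrate_home <old-home> <new-home>
migrate_home() {
    old_home=$1
    new_home=$2
    # create the target folder on the mounted disk
    mkdir -p "$new_home" || return 1
    # copy everything, dotfiles included, preserving owners and permissions
    cp -a "$old_home"/. "$new_home"/ || return 1
    # keep the original as a backup instead of deleting it
    mv "$old_home" "$old_home.bak" || return 1
    # the old path now points at the copy on the data disk
    ln -s "$new_home" "$old_home"
}
```

It would be called as `migrate_home /home/<username> /mnt/wsl/dataDisk/<username>`. Once everything works from the new location, the `.bak` folder can be removed by hand.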

The ultimate bonus

Now that you have migrated your user data to the VHD, let’s tackle the convenient part of the ULTIMATE setup. This allows you to switch your Linux distros as you wish, while keeping all your data in one central place.

You want to run Kali alongside Ubuntu while still being able to access your user data? This comes in handy when things don’t work as expected and you want to check whether the problem is in Ubuntu or elsewhere. Just make sure to have the same username as you have on Ubuntu and execute these commands.
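As a sketch of what this could look like in the second distro: the data already lives on the disk, so only the symlink has to be created. The helper below is hypothetical and assumes the disk is already mounted, so that the shared folder exists. It keeps the distro’s freshly created home directory as a `.bak` backup and does nothing if the link is already in place.

```shell
# Point an existing home directory at the shared folder on the data disk.
# Usage: link_shared_home <home-dir> <shared-dir>
link_shared_home() {
    home_dir=$1
    shared_dir=$2
    # refuse to continue if the shared folder is missing (disk not mounted yet)
    [ -d "$shared_dir" ] || { echo "missing: $shared_dir" >&2; return 1; }
    # already linked to the right place: nothing to do
    if [ -L "$home_dir" ] && [ "$(readlink "$home_dir")" = "$shared_dir" ]; then
        return 0
    fi
    # keep whatever is currently at the home path as a backup
    if [ -e "$home_dir" ] || [ -L "$home_dir" ]; then
        mv "$home_dir" "$home_dir.bak" || return 1
    fi
    ln -s "$shared_dir" "$home_dir"
}
```

It would be called as `link_shared_home /home/<username> /mnt/wsl/dataDisk/<username>`, again as root.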

I hope you learned something, and stay tuned for the next part, where I’ll cover the proper setup of Docker inside WSL2.


We recently bought a new set of laptops at Debricked, and since I’m a huge fan of Arch Linux, I decided to use it as my main operating system. The laptop in question, a Dell XPS 15 7590, is equipped with both an Intel CPU with built-in graphics and a dedicated Nvidia GPU. You may wonder why this is important to mention, but by the end of this article, you’ll know. For now, let’s discuss the performance issues I quickly discovered.

Docker performance issues

Our development environment makes heavy use of Docker containers, with local folders bind mounted into the containers. While my new installation seemed snappy enough during regular browsing, I immediately noticed something was wrong once I had set up my development environment. One of the first steps is to recreate our test database. This usually takes around 3-4 minutes, and I admit I was eagerly looking forward to seeing how fast it would be on my shiny new laptop.

After a minute I started to realize that something was terribly wrong.

16 minutes and 46 seconds later, the script had finished, and I was disappointed. Recreating the database was almost five times as slow as on my old laptop! Running the script again using time ./recreate_database.sh, I got the following output:

What stands out is the extreme amount of time spent in kernel space. What is happening here? A quick check on my old laptop, for reference, showed that it only spent 4 seconds in kernel space for the same script. Clearly something was way off with the configuration of my new installation.

Debug all things: Disk I/O

My initial thought was that the underlying issue was with my partitioning and disk setup. All that time in the kernel must be spent on something, and I/O wait seemed like the most likely candidate. I started by checking the Docker storage driver, since I had heard that the wrong driver can severely affect performance, but no, the storage driver was overlay2, just as it was supposed to be.

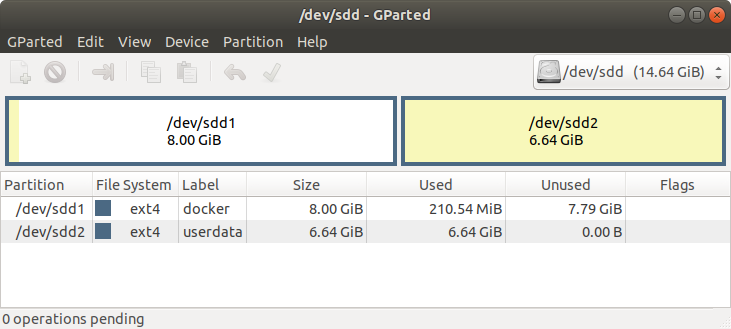

The partition layout of the laptop was a fairly ordinary LUKS+LVM+XFS layout, to get a flexible partition scheme with full-disk encryption. I didn’t see a particular reason why this wouldn’t work, but I tested several different options:

- Using ext4 instead of XFS,

- Creating an unencrypted partition outside LVM,

- Using a ramdisk.

After using a ramdisk, and still getting an execution time of 16 minutes, I realised that clearly disk I/O can’t be the culprit.

What’s really going on in the kernel?

After some searching, I found out about the very neat perf top tool, which allows profiling of threads, including threads in the kernel itself. Very useful for what I’m trying to do!

Firing up perf top at the same time as running the recreate_database.sh script yielded the following very interesting results, as can be seen in the screenshot below.

That’s a lot of time spent in read_hpet which is the High Precision Event Timer. A quick check on my other computers showed that no other computer had the same behaviour. Finally I had some clue on how to proceed.

The solution

While reading up on the HPET was interesting on its own, it didn’t really give me an immediate answer to what was happening. However, in my aimless, almost desperate, searching I did stumble upon a couple of threads discussing the performance impact of having HPET either enabled or disabled when gaming.

While not exactly related to my problem – I simply want my Docker containers to work, not do any high performance gaming – I did start to wonder which of the graphics cards was actually being used on my system. After installing Arch, the graphical interface worked from the beginning without any configuration, so I hadn’t actually selected which driver to use: the one for the integrated GPU, or the one for the dedicated Nvidia card.

After running lsmod to list the currently loaded kernel modules, I discovered that modules for both cards were in fact loaded, in this case both i915 and nouveau. Now, I have no real use for the dedicated graphics card, so having it enabled would probably just draw extra power. So, I blacklisted the modules related to nouveau by adding them to /etc/modprobe.d/blacklist.conf, in this case the following modules:
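Entries in that file take the form `blacklist <module>`, so the line for the driver itself would be `blacklist nouveau`; which further related modules to list depends on your system. To check which of the two GPU drivers are loaded before and after a reboot, a small helper like the following can filter the `lsmod` output. The function name is made up for this sketch, and it assumes the usual `lsmod` layout with one header line followed by one module per line.

```shell
# Print which of the given module names appear in lsmod-style output on stdin.
# Usage: lsmod | loaded_modules i915 nouveau
loaded_modules() {
    awk -v wanted="$*" '
        # build a lookup table from the space-separated list of names
        BEGIN { n = split(wanted, names, " "); for (i = 1; i <= n; i++) want[names[i]] = 1 }
        # skip the "Module Size Used by" header, then match on the first column
        NR > 1 && ($1 in want) { print $1 }
    '
}
```

Running `lsmod | loaded_modules i915 nouveau` prints one line per matching module, so an output containing only i915 means the blacklist took effect.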

Upon rebooting the computer, I confirmed that only the i915 module was loaded. To my great surprise, I also noticed that perf top no longer showed any significant time spent in read_hpet. I immediately tried recreating the database again, and finally I got the performance boost I wanted from my new laptop, as can be seen below:

As you can see, almost no time is spent in kernel space, and the total time is now faster than the 3-4 minutes of my old laptop. Finally, to confirm, I whitelisted the modules again, and after a reboot the problem was back! Clearly the loading of nouveau causes a lot of overhead, for some reason still unknown to me.

Conclusion

So there you go, apparently having the wrong graphics drivers loaded can make your Docker containers unbearably slow. Hopefully this post can help someone else in the same position as me to get their development environment up and running at full speed.